In the last blog post we talked about the shortcomings of HTTP/1.1 and the magical promises of HTTP/2. But how does that actually work under the hood? By the end of this first part of the “HTTP/2 - How?” posts, you’ll understand exactly how HTTP/2 implements binary frames, the basic units of the protocol; how stream multiplexing works; and which types of frames HTTP/2 uses and what each one is for. We’ll wrap everything up by walking through the protocol flow of HTTP/2.

The Game Plan

We will learn how HTTP/2 works like learning a new language - first the alphabet (frames), then words (streams), then sentences (complete protocol flows).

I’ll keep it super practical. No unnecessary theory, just the knowledge you need to understand and debug HTTP/2 in the real world.

Binary Protocol & Framing - The Foundation

What do I mean by “binary protocol”? I mean that HTTP/2 threw out HTTP/1.1’s text-based approach entirely. No more parsing “GET /api/users HTTP/1.1\r\n” line by line. Instead, everything travels in these little packages called binary frames.

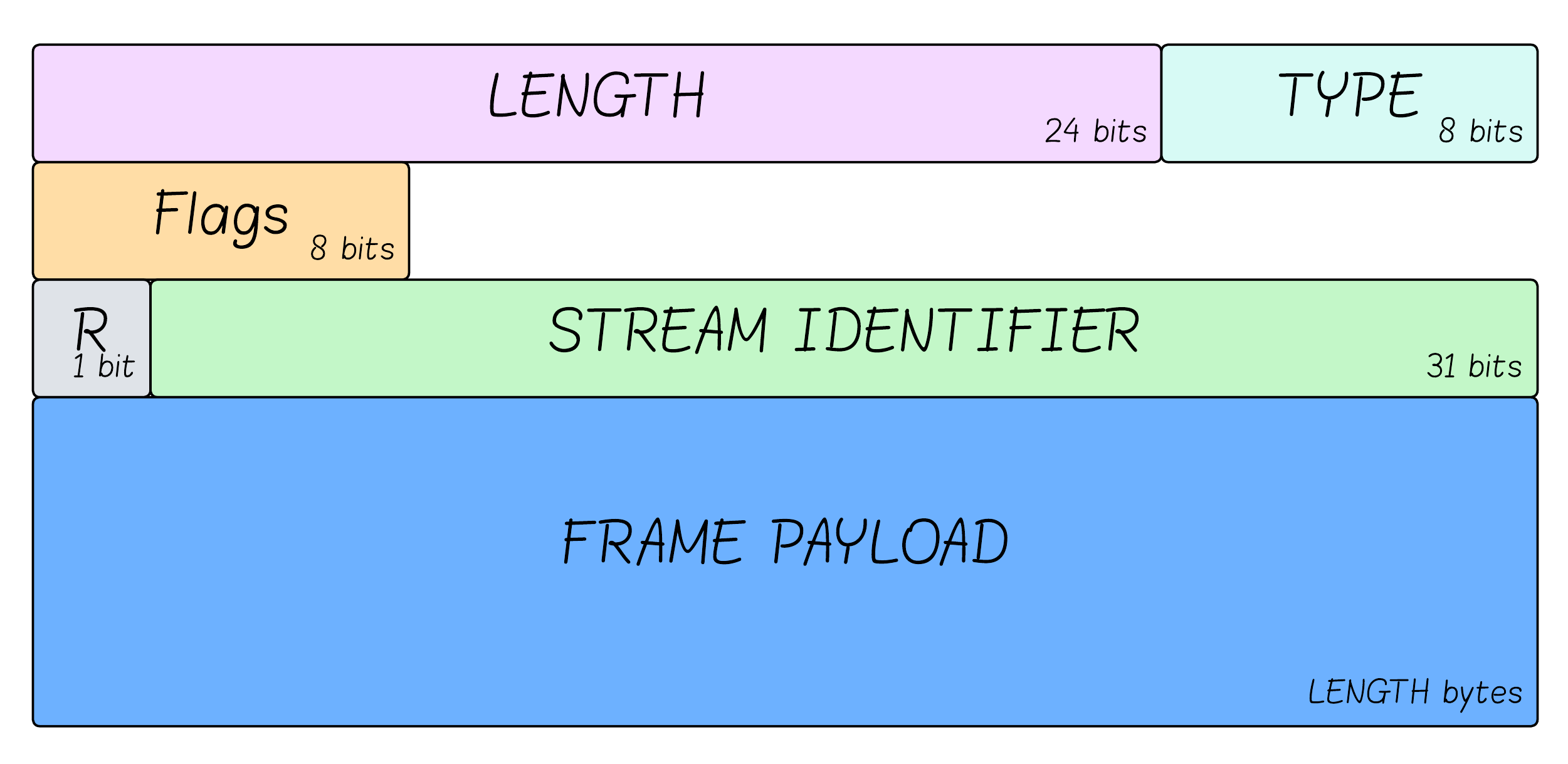

Each frame has:

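- Length: The length of the frame payload in bytes, as a 24-bit number. By default a payload can be at most 16KB (16,384 bytes), unless the receiver raises SETTINGS_MAX_FRAME_SIZE.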

- Type: The type of frame (HEADERS, DATA, SETTINGS, etc.), which determines the format and semantics of the frame.

- Flags: Flags that are specific to the frame type (for example, END_STREAM and END_HEADERS on a HEADERS frame).

- Reserved: A single reserved bit, currently unused.

- Stream ID: Which stream the frame belongs to (31 bits). Frames with stream ID 0 are associated with the connection as a whole rather than with an individual stream (for example, SETTINGS frames, which declare configuration for the connection, are sent on stream 0).

- Frame Payload: The content of the frame. Each frame type has a different structure.

It’s a big improvement over HTTP/1.1 because there’s no more guessing where one request ends and another begins. The receiver reads the length, jumps straight to the payload, processes it, and moves on. It’s like the difference between reading a book where sentences have random lengths and reading a telegram where each message is clearly marked with its length upfront.
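To make the layout concrete, here is a minimal Python sketch of the 9-byte frame header (24-bit length, 8-bit type, 8-bit flags, 1 reserved bit and a 31-bit stream ID) and of a reader that walks a buffer frame by frame using nothing but the length field. The function names are my own, not from any HTTP/2 library, and the reader assumes the connection’s opening “magic” bytes have already been consumed.

```python
import struct
from typing import Iterator, Tuple

# Illustrative sketch; pack_frame_header, parse_frame_header and iter_frames are hypothetical names.
FRAME_HEADER_LEN = 9  # every frame starts with a fixed 9-byte header


def pack_frame_header(length: int, frame_type: int, flags: int, stream_id: int) -> bytes:
    """Build a frame header; the reserved bit is always sent as 0."""
    if not 0 <= length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream ID must fit in 31 bits")
    # Pack the length as 32 bits and drop the top byte to get a 24-bit field.
    return struct.pack(">I", length)[1:] + struct.pack(">BBI", frame_type, flags, stream_id)


def parse_frame_header(header: bytes) -> Tuple[int, int, int, int]:
    """Return (length, type, flags, stream_id) from a 9-byte frame header."""
    if len(header) != FRAME_HEADER_LEN:
        raise ValueError("a frame header is exactly 9 bytes")
    length = int.from_bytes(header[0:3], "big")
    frame_type, flags, raw_id = struct.unpack(">BBI", header[3:9])
    return length, frame_type, flags, raw_id & 0x7FFFFFFF  # ignore the reserved bit


def iter_frames(buffer: bytes) -> Iterator[Tuple[int, int, int, bytes]]:
    """Yield (type, flags, stream_id, payload) for each complete frame in buffer.
    No delimiter scanning: read the header, then jump `length` bytes ahead."""
    offset = 0
    while len(buffer) - offset >= FRAME_HEADER_LEN:
        length, frame_type, flags, stream_id = parse_frame_header(
            buffer[offset:offset + FRAME_HEADER_LEN])
        end = offset + FRAME_HEADER_LEN + length
        if end > len(buffer):
            break  # partial frame: a real reader would wait for more bytes
        yield frame_type, flags, stream_id, buffer[offset + FRAME_HEADER_LEN:end]
        offset = end
```

For example, `pack_frame_header(12, 0x1, 0x5, 1)` builds the header of a 12-byte HEADERS frame (type 0x1) on stream 1 with END_STREAM (0x1) and END_HEADERS (0x4) set.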

Streams - Virtual Conversations

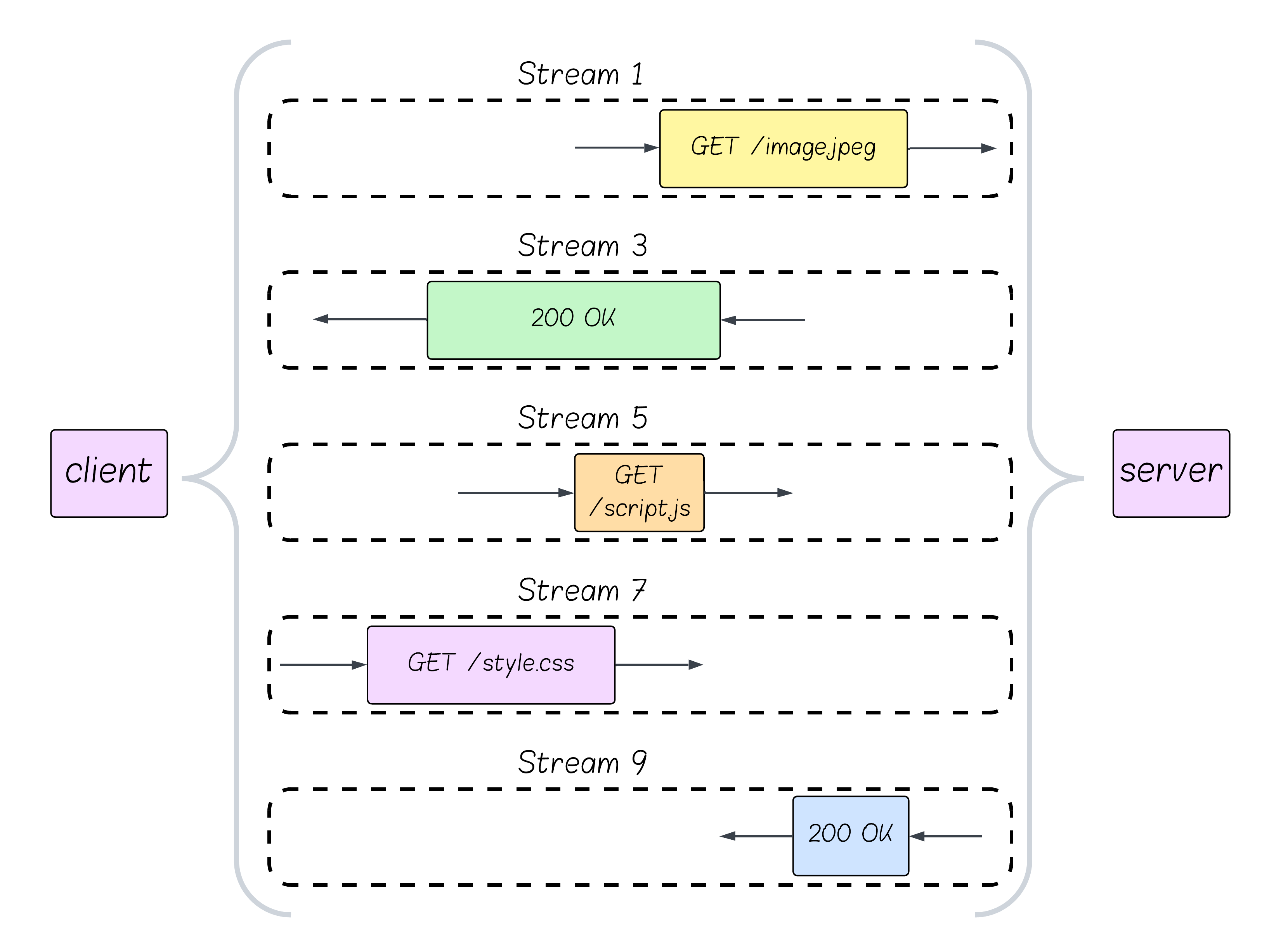

Here’s where HTTP/2 gets clever. A stream is essentially a virtual conversation happening over your single TCP connection. Want to fetch /api/users? That’s Stream 1. Need /styles.css at the same time? That’s Stream 3. Both are chatting away on the same TCP connection, but they’re completely separate conversations.

Each request (and response) is sent over a stream. We generally refer to them as “HTTP messages”, and they have exactly the same semantics as in HTTP/1.x. Every HTTP message consists of a headers part and a payload part. We will see later how it is built from HTTP/2 frames.

Stream IDs follow simple rules:

- Odd numbers (1, 3, 5, 7…): “Hey server, I’m starting this conversation”

- Even numbers (2, 4, 6, 8…): “Hey client, I’m pushing this to you” (server push)

- Stream 0: Reserved for “let’s talk about our connection itself”
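These rules are simple enough to express directly. Below is a small Python sketch; `stream_initiator` and `ClientStreamIds` are hypothetical helper names. One rule not listed above, but required by the spec, is that an endpoint must open each new stream with an ID greater than any it has used before, which is why the allocator only counts upward.

```python
# Illustrative sketch; stream_initiator and ClientStreamIds are hypothetical names.


def stream_initiator(stream_id: int) -> str:
    """Classify a stream ID according to who opens it."""
    if stream_id == 0:
        return "connection"  # stream 0: frames about the connection itself
    if stream_id % 2 == 1:
        return "client"      # odd: client-initiated request streams
    return "server"          # even: server-initiated (push) streams


class ClientStreamIds:
    """Hands out odd, strictly increasing stream IDs for a client."""

    def __init__(self) -> None:
        self._next = 1

    def allocate(self) -> int:
        if self._next >= 2**31:
            raise RuntimeError("stream IDs exhausted: open a new connection")
        stream_id = self._next
        self._next += 2  # skip the even IDs, which belong to the server
        return stream_id
```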

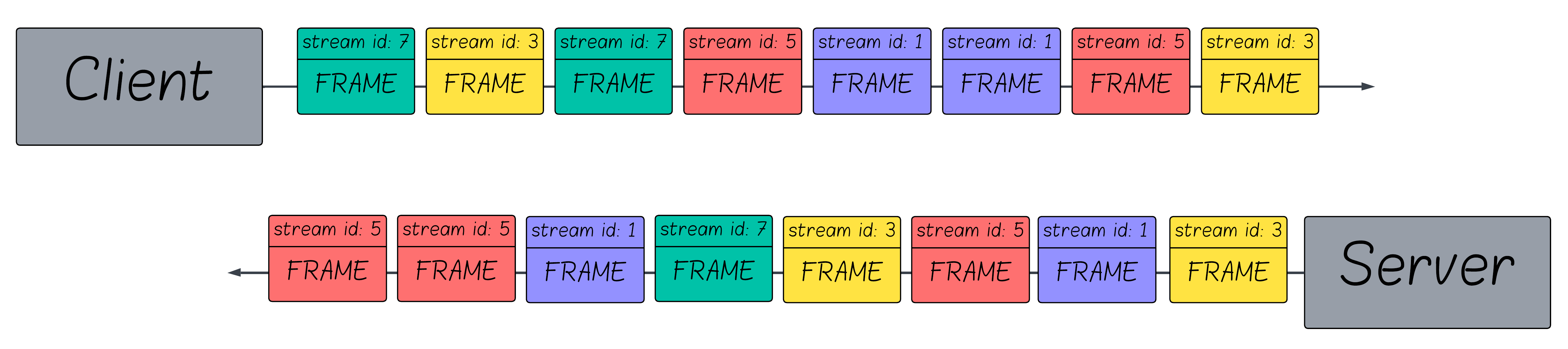

Multiple streams interleave their frames over the same TCP connection. When frames arrive, the receiver looks at the Stream ID and says “oh, this frame belongs to the CSS request” or “this one’s for the HTML request.”
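Demultiplexing boils down to a lookup by Stream ID. In this simplified Python sketch, a frame is modeled as a hypothetical `(stream_id, payload)` tuple instead of a real parsed frame, and payloads are reassembled per stream in arrival order.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Illustrative sketch; demultiplex() and the tuple frame format are hypothetical.


def demultiplex(frames: Iterable[Tuple[int, bytes]]) -> Dict[int, bytes]:
    """Reassemble interleaved frames into one byte string per stream."""
    streams: Dict[int, bytearray] = defaultdict(bytearray)
    for stream_id, payload in frames:
        streams[stream_id] += payload  # frames of one stream keep their order
    return {stream_id: bytes(data) for stream_id, data in streams.items()}


# HTML on stream 1 and CSS on stream 3, interleaved on one connection.
wire = [(1, b"<html>"), (3, b"body {"), (1, b"</html>"), (3, b"}")]
print(demultiplex(wire))  # {1: b'<html></html>', 3: b'body {}'}
```

Ordering only matters within a stream: TCP already delivers every frame in order, and HTTP/2 simply lets frames of different streams take turns.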

On the TCP connection itself, it looks something like this:

The Stream Lifecycle

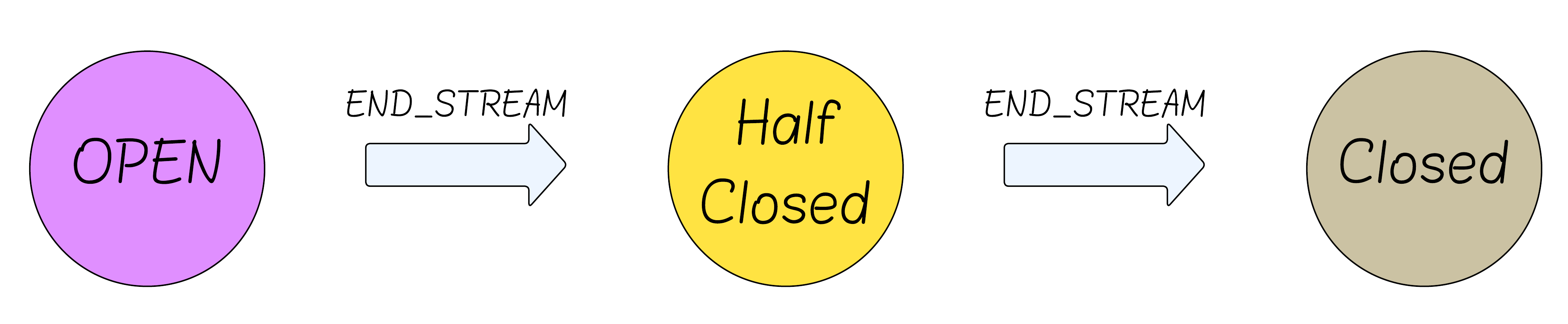

Streams have a pretty straightforward life:

- Open: “Let’s chat! Both of us can send messages”

- Half-Closed: “I’m done talking, but you can keep going”

- Closed: “Conversation over, let’s clean up” (when both sides finished talking)

There’s also an “Idle” state for streams that haven’t started yet, but honestly, it’s redundant.

An endpoint declares it’s finished sending data on a specific stream by setting the END_STREAM flag, which is carried by the HTTP message frames (HEADERS and DATA). For a stream to close, both endpoints need to declare (using the END_STREAM flag) that they are done talking.

The reason we need the END_STREAM flag is so that each endpoint knows when the other side has finished sending its HTTP message. If a server gets the headers of a request, how will it know whether a payload still needs to arrive? That’s the purpose of the END_STREAM flag.

This is also why, by design of the protocol, each stream can carry only one request and one response.

It might be a little confusing at first, but remember that the END_STREAM flag doesn’t really end the stream; it only closes the sender’s side of it.
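Here is a deliberately simplified Python model of that lifecycle, driven only by END_STREAM. The spec splits “Half-Closed” into local and remote variants depending on which side finished, and the sketch does the same. It is not the full state machine from the spec: the reserved states used by server push and the immediate close caused by RST_STREAM are left out, and the class name is hypothetical.

```python
# Illustrative sketch: a simplified, hypothetical model of the stream lifecycle.


class Stream:
    """Tracks one stream's state using only the END_STREAM flag
    (no RST_STREAM, no server-push 'reserved' states)."""

    def __init__(self, stream_id: int) -> None:
        self.stream_id = stream_id
        self.state = "idle"
        self.local_done = False   # we have sent END_STREAM
        self.remote_done = False  # the peer has sent END_STREAM

    def _update_state(self) -> None:
        if self.local_done and self.remote_done:
            self.state = "closed"
        elif self.local_done:
            self.state = "half-closed (local)"
        elif self.remote_done:
            self.state = "half-closed (remote)"
        else:
            self.state = "open"

    def send(self, end_stream: bool = False) -> None:
        """We send a HEADERS or DATA frame on this stream."""
        if self.local_done:
            raise RuntimeError("cannot send after our END_STREAM")
        self.local_done = end_stream
        self._update_state()

    def receive(self, end_stream: bool = False) -> None:
        """The peer sends us a HEADERS or DATA frame on this stream."""
        if self.remote_done:
            raise RuntimeError("peer sent a frame after its END_STREAM")
        self.remote_done = end_stream
        self._update_state()


# A GET request: the client's HEADERS frame carries END_STREAM right away.
s = Stream(1)
s.send(end_stream=True)     # idle -> half-closed (local)
s.receive()                 # response HEADERS: still half-closed (local)
s.receive(end_stream=True)  # last DATA frame of the response
print(s.state)              # closed
```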

Frame Types

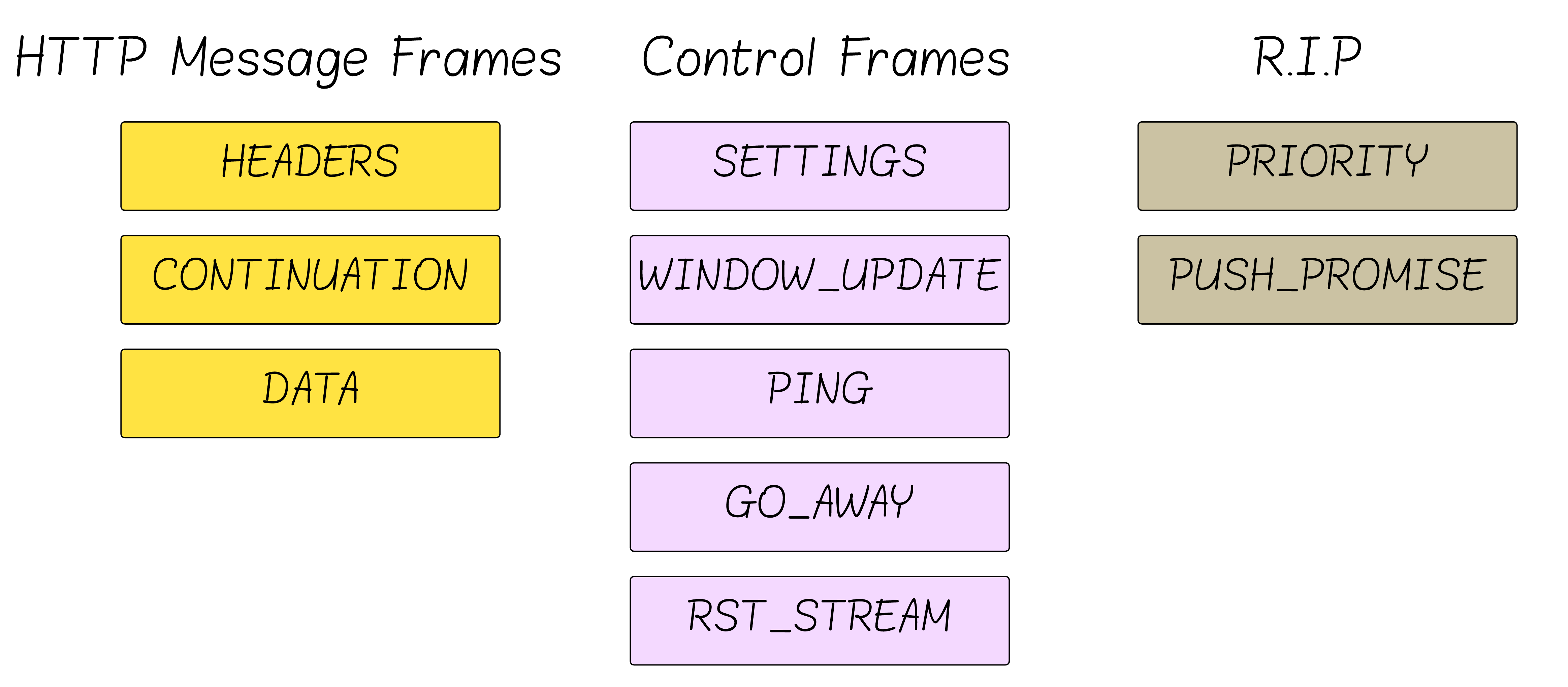

HTTP/2 defines 10 frame types. I’ve organized them into 3 groups:

HTTP Message Frames:

- HEADERS: The HTTP headers (method, path, status, …) part of an HTTP message (request or response)

- DATA: The payload of the HTTP message (HTML, JSON, JPEG, GIF)

- CONTINUATION: Continuation of the HTTP headers, if the headers couldn’t fit inside 1 frame (basically, “My headers were too big for one frame, here’s the rest”)

Control Frames:

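- SETTINGS: Carries configuration parameters that the receiving side should apply.

- WINDOW_UPDATE: Flow-control credit (like a TCP window), either per stream or for the entire connection.

- PING: Checks that the connection is alive and measures round-trip time; the peer replies with a PING that has the ACK flag set (pong).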

- GOAWAY: Used to shut down the connection gracefully

- RST_STREAM: Cancel / terminate a stream immediately.

Deprecated/Unused:

- PRIORITY: Stream priority (deprecated - the RFC gave up on this)

- PUSH_PROMISE: Server push announcement (browsers killed it in 2022)

In practice - HEADERS, DATA, SETTINGS, and WINDOW_UPDATE handle about 95% of what you’ll see in the wild.
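On the wire, the Type field is just a number. The codes below are the ones registered for the ten frame types; splitting them into three sets mirrors this post’s groups (my grouping, not something the spec defines), and `frame_group` is a hypothetical helper.

```python
from enum import IntEnum

# Illustrative sketch; the grouping and frame_group() are hypothetical,
# the numeric codes are the registered HTTP/2 frame type codes.


class FrameType(IntEnum):
    DATA = 0x0
    HEADERS = 0x1
    PRIORITY = 0x2
    RST_STREAM = 0x3
    SETTINGS = 0x4
    PUSH_PROMISE = 0x5
    PING = 0x6
    GOAWAY = 0x7
    WINDOW_UPDATE = 0x8
    CONTINUATION = 0x9


MESSAGE_FRAMES = frozenset({FrameType.HEADERS, FrameType.DATA, FrameType.CONTINUATION})
CONTROL_FRAMES = frozenset({FrameType.SETTINGS, FrameType.WINDOW_UPDATE, FrameType.PING,
                            FrameType.GOAWAY, FrameType.RST_STREAM})
DEPRECATED_FRAMES = frozenset({FrameType.PRIORITY, FrameType.PUSH_PROMISE})


def frame_group(type_code: int) -> str:
    try:
        frame_type = FrameType(type_code)
    except ValueError:
        return "unknown"  # receivers must ignore frame types they don't recognize
    if frame_type in MESSAGE_FRAMES:
        return "message"
    if frame_type in CONTROL_FRAMES:
        return "control"
    return "deprecated"
```

Returning "unknown" instead of raising is intentional: HTTP/2 requires receivers to ignore and discard frames of unknown types, which is how the protocol leaves room for extensions.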

Let me break down the three control frames that actually do interesting stuff:

Control Frames

SETTINGS - “One Frame, Everybody Knows The Rules”

The SETTINGS frame describes the configuration parameters of the endpoint that sent it.

Those parameters put constraints on the behavior of the other endpoint.

Whenever an endpoint publishes new SETTINGS for the conversation (new parameter values), the other side must acknowledge them by replying with a SETTINGS frame that has the ACK flag set (a “SETTINGS ACK”).

Common parameters:

- SETTINGS_MAX_FRAME_SIZE: “The frames I accept can be up to 1MB” (default 16KB)

- SETTINGS_INITIAL_WINDOW_SIZE: “You can send me up to 1MB on each stream before waiting for my ‘you can keep going’” (default 65,535 bytes, about 64KB)

- SETTINGS_MAX_CONCURRENT_STREAMS: “I can handle 100 conversations at once”

- SETTINGS_HEADER_TABLE_SIZE: “My HPACK table can hold 8KB” (default 4KB)
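A SETTINGS payload is simply a list of 6-byte entries: a 16-bit identifier followed by a 32-bit value. The Python sketch below encodes and decodes such a payload. The identifier numbers are the registered ones, while the helper names are my own; it only covers the payload, not the 9-byte frame header around it.

```python
import struct
from typing import Dict

# Illustrative sketch; encode_settings/decode_settings are hypothetical names.
SETTINGS_IDS = {
    "SETTINGS_HEADER_TABLE_SIZE": 0x1,
    "SETTINGS_ENABLE_PUSH": 0x2,
    "SETTINGS_MAX_CONCURRENT_STREAMS": 0x3,
    "SETTINGS_INITIAL_WINDOW_SIZE": 0x4,
    "SETTINGS_MAX_FRAME_SIZE": 0x5,
    "SETTINGS_MAX_HEADER_LIST_SIZE": 0x6,
}
NAMES_BY_ID = {ident: name for name, ident in SETTINGS_IDS.items()}


def encode_settings(params: Dict[str, int]) -> bytes:
    """One 6-byte entry (16-bit ID, 32-bit value) per parameter."""
    return b"".join(struct.pack(">HI", SETTINGS_IDS[name], value)
                    for name, value in params.items())


def decode_settings(payload: bytes) -> Dict[str, int]:
    if len(payload) % 6 != 0:
        raise ValueError("SETTINGS payload length must be a multiple of 6")
    result: Dict[str, int] = {}
    for offset in range(0, len(payload), 6):
        ident, value = struct.unpack_from(">HI", payload, offset)
        name = NAMES_BY_ID.get(ident)
        if name is not None:  # unknown identifiers must be ignored
            result[name] = value
    return result


payload = encode_settings({"SETTINGS_MAX_CONCURRENT_STREAMS": 100,
                           "SETTINGS_INITIAL_WINDOW_SIZE": 1_048_576})
```

The acknowledgment mentioned above is a SETTINGS frame with the ACK flag (0x1) set and an empty payload.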

It’s important to note that SETTINGS is not a negotiation (like in TLS) but a declaration made by either side. The reasons it’s a per-side configuration can be summarized by two arguments, which are two sides of the same coin:

- An endpoint can’t change the limits the other side sent, which makes sense: if I told you I am willing to receive only 10 concurrent streams because my server is overloaded right now, it wouldn’t make sense for you to be able to tell me I am willing to receive 10,000.

- SETTINGS applies to only one side of the conversation: a client might choose a big initial window size to improve performance and latency, while a server might choose a smaller initial window size so it won’t be flooded by a single client. The two sides’ configurations can be completely different.
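One way to picture the “declaration, not negotiation” model is to have each endpoint keep two separate sets of settings: the ones it declared (which it enforces on what it receives) and the ones its peer declared (which it obeys when sending). The class below is a hypothetical sketch of that bookkeeping, using SETTINGS_MAX_CONCURRENT_STREAMS, which limits how many streams the receiver of the setting may open.

```python
from dataclasses import dataclass, field
from typing import Dict

# Illustrative sketch; ConnectionSettings and its methods are hypothetical.


@dataclass
class ConnectionSettings:
    """One set of settings per direction."""
    local: Dict[str, int] = field(default_factory=dict)   # what we declared
    remote: Dict[str, int] = field(default_factory=dict)  # what the peer declared

    def on_peer_settings(self, params: Dict[str, int]) -> None:
        # Nothing to negotiate: adopt the peer's values as-is
        # (a real endpoint would now send a SETTINGS ACK).
        self.remote.update(params)

    def can_open_stream(self, streams_we_have_open: int) -> bool:
        # The peer's MAX_CONCURRENT_STREAMS caps the streams *we* may open;
        # until the peer sets it, there is no limit.
        limit = self.remote.get("SETTINGS_MAX_CONCURRENT_STREAMS")
        return limit is None or streams_we_have_open < limit


client = ConnectionSettings(local={"SETTINGS_INITIAL_WINDOW_SIZE": 1_048_576})
client.on_peer_settings({"SETTINGS_MAX_CONCURRENT_STREAMS": 10})
print(client.can_open_stream(9), client.can_open_stream(10))  # True False
```

Note that the client’s own 1MB window stays in `local` untouched: the server’s declaration limits the client, not the other way around.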

WINDOW_UPDATE - Traffic Control

WINDOW_UPDATE implements HTTP/2’s flow control - preventing fast senders from overwhelming slow receivers. This is different from TCP flow control because it works at both connection (STREAM_ID=0) and stream (STREAM_ID!=0) levels.

Flow control applies only to DATA frames. HEADERS and CONTINUATION frames are not counted against the window, and neither are control frames. Why? Control frames handle connection management and must always be able to flow freely. If SETTINGS or WINDOW_UPDATE frames were subject to flow control, you could create deadlocks where the connection can’t update its parameters or its flow-control windows.

How it works:

- Each stream (and the connection as a whole) has a “window” (buffer size) for how much data it can receive

- When the sender uses up the window, it must stop sending until the receiver processes data

- The receiver sends WINDOW_UPDATE to say “you can now send 1000 more”
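The bookkeeping on the sending side can be sketched in a few lines of Python. The class and function names are hypothetical; the numbers follow the spec: a default initial window of 65,535 bytes, WINDOW_UPDATE increments between 1 and 2^31-1, and a window that may never exceed 2^31-1. The key detail is that a DATA frame must fit in both the stream window and the connection window.

```python
# Illustrative sketch; FlowControlWindow and sendable() are hypothetical names.
MAX_WINDOW = 2**31 - 1
DEFAULT_INITIAL_WINDOW = 65_535


class FlowControlWindow:
    """Sender-side view of one flow-control window (a stream, or the whole connection)."""

    def __init__(self, initial: int = DEFAULT_INITIAL_WINDOW) -> None:
        self.available = initial

    def consume(self, n: int) -> None:
        # Called when DATA bytes are sent: the receiver's window shrinks by n.
        if n > self.available:
            raise RuntimeError("sending would overrun the receiver's window")
        self.available -= n

    def window_update(self, increment: int) -> None:
        # Called when a WINDOW_UPDATE arrives for this stream (or for stream 0).
        if not 1 <= increment <= MAX_WINDOW:
            raise ValueError("increment must be between 1 and 2^31-1")
        if self.available + increment > MAX_WINDOW:
            raise OverflowError("window would exceed 2^31-1 (a FLOW_CONTROL_ERROR)")
        self.available += increment


def sendable(conn: FlowControlWindow, stream: FlowControlWindow, wanted: int) -> int:
    """DATA bytes must fit in BOTH the stream window and the connection window."""
    return min(wanted, conn.available, stream.available)


conn, stream = FlowControlWindow(), FlowControlWindow()
n = sendable(conn, stream, 100_000)     # 65535: limited by the default window
conn.consume(n)
stream.consume(n)
stream.window_update(1_000)             # the stream has room again...
print(sendable(conn, stream, 100_000))  # 0: ...but the connection window is empty
conn.window_update(5_000)
print(sendable(conn, stream, 100_000))  # 1000: now the stream window is the limit
```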

Why this matters: It prevents memory exhaustion attacks and ensures fair resource sharing between streams, so one stream cannot take all the bandwidth of the connection.

RST_STREAM

RST_STREAM allows either side to immediately terminate a specific stream without affecting other streams or the connection. It’s like TCP RST but surgical - killing one conversation while others continue.

When it’s used:

- Client cancels a request (user clicks “stop” or navigates away)

- Server rejects a request (malformed headers, rate limiting)

- Stream encounters an error that can’t be recovered

Error codes (some common ones):

- NO_ERROR (0): Clean termination

- PROTOCOL_ERROR (1): HTTP/2 protocol violation

- CANCEL (8): User/application cancelled

- REFUSED_STREAM (7): Server refusing to process

So now if a user starts downloading a large video → clicks “back” button → Browser sends RST_STREAM(CANCEL) → Server stops sending video data → Other streams (CSS, JS) continue normally.

This was impossible in HTTP/1.1 - canceling one request meant killing the entire connection and losing all other in-flight requests.
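In bytes, the RST_STREAM(CANCEL) from the video example is tiny: a standard 9-byte header (type 0x3, no flags, the target stream ID) followed by a 4-byte error code. Here is a Python sketch that builds one; only the error codes listed above are included, and the function name and the stream number are hypothetical.

```python
import struct
from enum import IntEnum

# Illustrative sketch; rst_stream_frame() is a hypothetical name.
RST_STREAM_TYPE = 0x3


class ErrorCode(IntEnum):
    NO_ERROR = 0x0
    PROTOCOL_ERROR = 0x1
    REFUSED_STREAM = 0x7
    CANCEL = 0x8


def rst_stream_frame(stream_id: int, error: ErrorCode) -> bytes:
    if stream_id == 0:
        raise ValueError("RST_STREAM targets a stream, never stream 0")
    payload = struct.pack(">I", error)  # 32-bit error code
    header = struct.pack(">I", len(payload))[1:] + struct.pack(">BBI", RST_STREAM_TYPE, 0, stream_id)
    return header + payload


# Cancel the video on (say) stream 5; every other stream keeps going.
frame = rst_stream_frame(5, ErrorCode.CANCEL)
print(frame.hex())  # 00000403000000000500000008
```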

HTTP Message Frames

Now that we are done talking about how the protocol is built and how it can be controlled, let’s dive into the frames that carry what the HTTP/2 protocol actually transmits: HTTP messages.

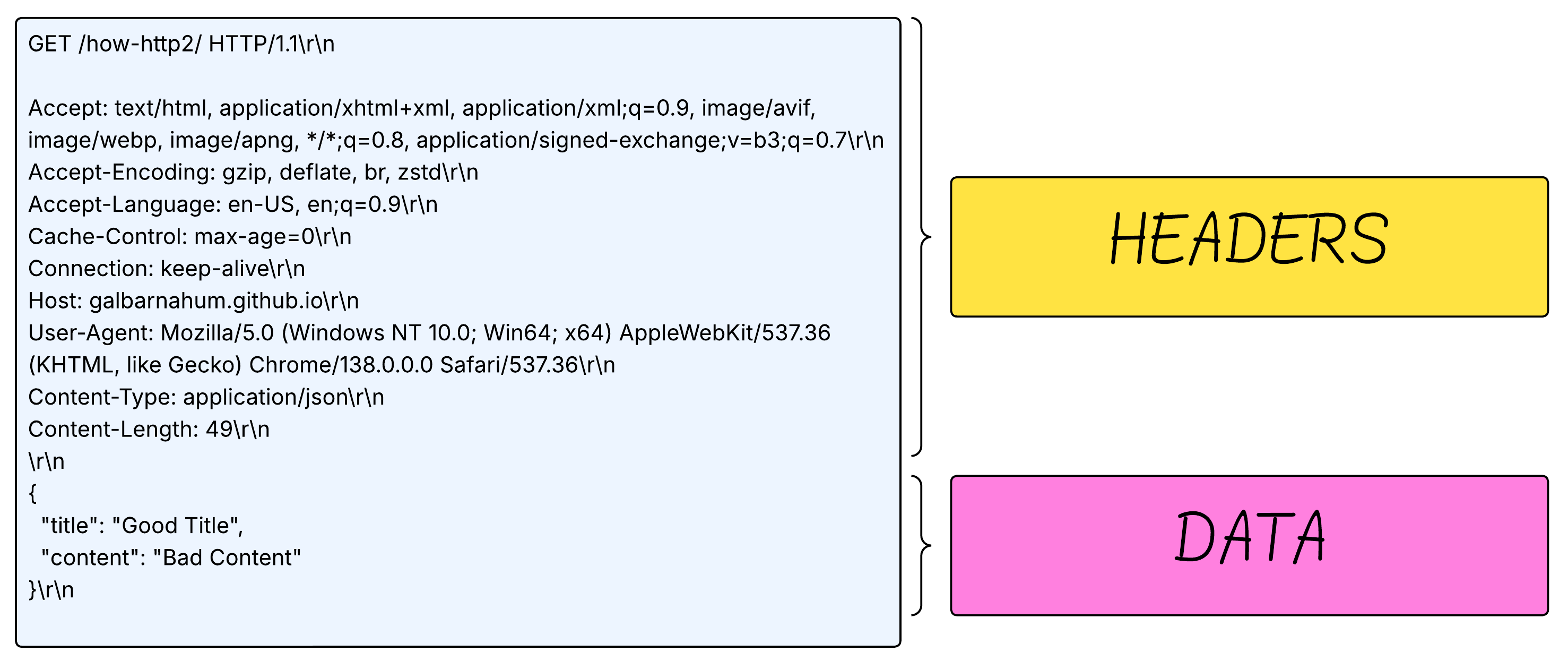

Instead of sending the headers and the payload of a request as one big message that the server needs to parse, HTTP/2 splits them into two separate frame types: HEADERS frames (which contain the headers) and DATA frames (which contain the payload).

CONTINUATION frames are used when the headers of a message can’t fit inside a single HEADERS frame, in which case one or more CONTINUATION frames are sent immediately after it, with no other frames in between. These frames are not common at all: the frame size limit is 16KB by default, having 16KB of headers is quite hard, and we haven’t even talked about HPACK, which reduces this size significantly.

These are the important (and not deprecated) flags of the HEADERS frame:

- END_HEADERS: signals that this frame ends the header block, so no CONTINUATION frames will follow on that stream. This way the other peer knows it has received the headers in full.

- END_STREAM: signals that the sender will send nothing more on that stream, neither more headers nor DATA frames. This way the other peer knows this is the complete HTTP message.

The payload of the HEADERS frame contains the headers of the HTTP message. We won’t get into the format now, since it uses HPACK. The good news is that Wireshark decodes it for you :)
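Putting the two flags together, here is a Python sketch of how a sender could split an already HPACK-encoded header block across one HEADERS frame and as many CONTINUATION frames as needed. It returns hypothetical `(type, flags, stream_id, payload)` tuples rather than bytes, uses the default 16KB frame size limit, and ignores padding and the deprecated priority fields.

```python
from typing import List, Tuple

# Illustrative sketch; header_frames() and its tuple format are hypothetical.
HEADERS, CONTINUATION = 0x1, 0x9
END_STREAM, END_HEADERS = 0x1, 0x4

Frame = Tuple[int, int, int, bytes]  # (type, flags, stream_id, payload)


def header_frames(stream_id: int, header_block: bytes,
                  max_frame_size: int = 16_384, end_stream: bool = False) -> List[Frame]:
    """Split an already HPACK-encoded header block into HEADERS + CONTINUATION frames."""
    chunks = [header_block[i:i + max_frame_size]
              for i in range(0, len(header_block), max_frame_size)] or [b""]
    frames: List[Frame] = []
    for index, chunk in enumerate(chunks):
        first, last = index == 0, index == len(chunks) - 1
        frame_type = HEADERS if first else CONTINUATION
        flags = 0
        if first and end_stream:
            flags |= END_STREAM   # lives on the HEADERS frame, never on CONTINUATION
        if last:
            flags |= END_HEADERS  # marks the final frame of the header block
        frames.append((frame_type, flags, stream_id, chunk))
    return frames
```

For a typical small GET request, this produces a single HEADERS frame with both END_STREAM and END_HEADERS set (flags 0x5).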

DATA frames

DATA frames have even fewer fields. Unlike HEADERS, which are continued by CONTINUATION frames, DATA frames are simply followed by more DATA frames until the entire payload has been sent.

The payload ends with the DATA frame that has the END_STREAM flag set.

There is also an option to send trailer headers, which are sent after the payload of the message. In this case no DATA frame carries END_STREAM; instead, the message ends with a final HEADERS frame (the trailers) that has the END_STREAM flag set.
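The same idea applies to the payload. In this Python sketch (the helper name and tuple format are hypothetical, as is the assumption that the trailer block is already HPACK-encoded and fits in one frame), the body is cut into DATA frames, and END_STREAM goes either on the last DATA frame or, when trailers are present, on the final HEADERS frame.

```python
from typing import List, Optional, Tuple

# Illustrative sketch; message_body_frames() and its tuple format are hypothetical.
DATA, HEADERS = 0x0, 0x1
END_STREAM, END_HEADERS = 0x1, 0x4

Frame = Tuple[int, int, int, bytes]  # (type, flags, stream_id, payload)


def message_body_frames(stream_id: int, body: bytes, max_frame_size: int = 16_384,
                        trailers: Optional[bytes] = None) -> List[Frame]:
    """Cut a body into DATA frames; END_STREAM goes on the last DATA frame,
    or on a trailing HEADERS frame when trailers are present.
    An empty body without trailers yields no frames: END_STREAM would then be
    set on the message's own HEADERS frame instead."""
    chunks = [body[i:i + max_frame_size] for i in range(0, len(body), max_frame_size)]
    frames: List[Frame] = []
    for index, chunk in enumerate(chunks):
        is_last = index == len(chunks) - 1
        flags = END_STREAM if is_last and trailers is None else 0
        frames.append((DATA, flags, stream_id, chunk))
    if trailers is not None:
        frames.append((HEADERS, END_STREAM | END_HEADERS, stream_id, trailers))
    return frames
```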

Protocol Flow

We now have the building blocks of the HTTP/2 protocol. Let’s see how they work together in a real HTTP/2 conversation:

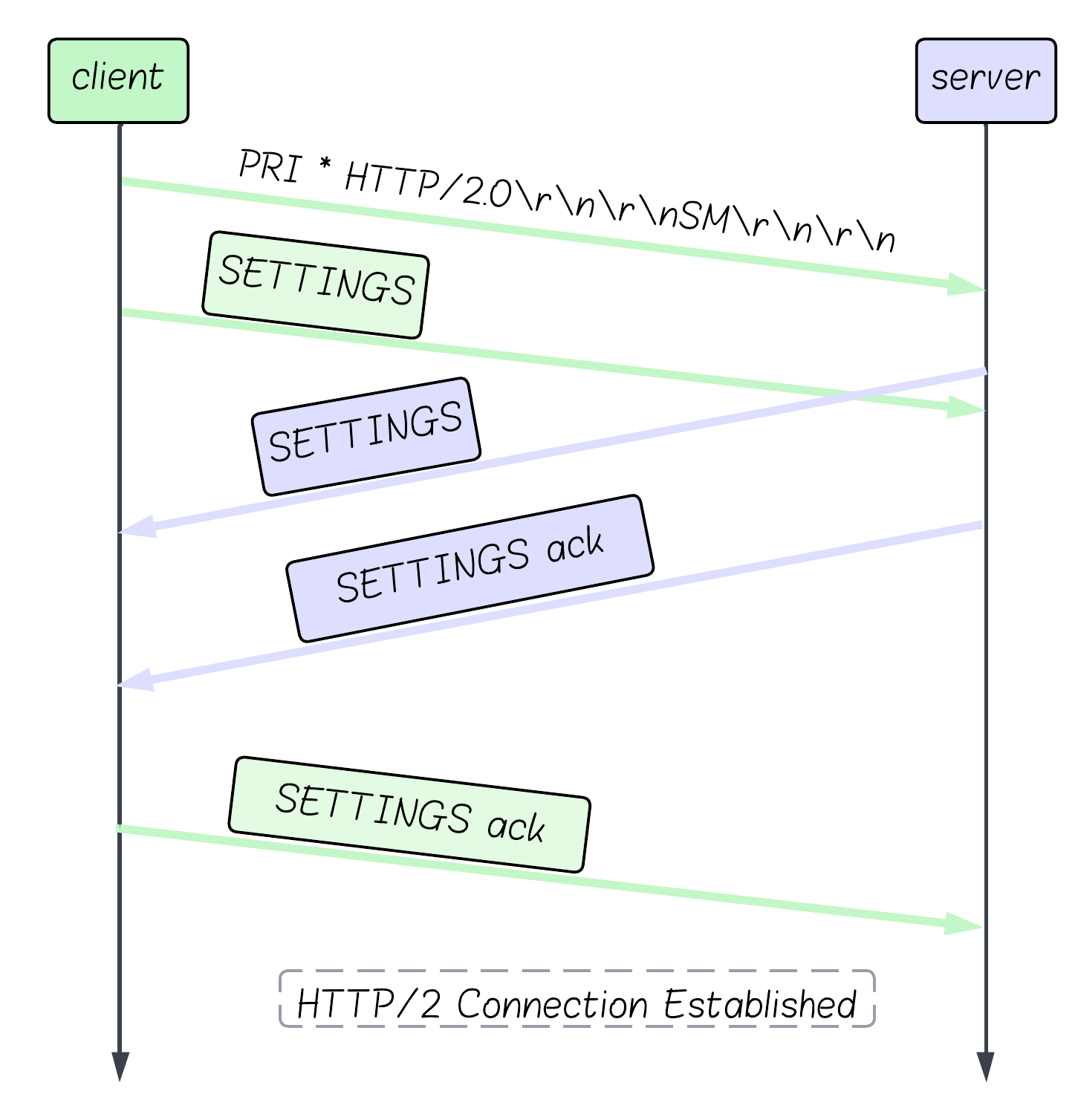

Connection Setup

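You should know that the client starts the connection by sending a fixed 24-byte “magic” string (the beginning of what the spec calls the connection preface), which tells the server it speaks HTTP/2 and not HTTP/1.1:

“PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n”

Right after that, each side sends a SETTINGS frame declaring its parameters.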

Note that most of the time, a WINDOW_UPDATE frame on stream 0 will also be sent to increase the size of the window for the entire connection, because SETTINGS_INITIAL_WINDOW_SIZE only changes the windows of individual streams.
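To see the setup in bytes, here is a Python sketch that assembles what a client might write first: the 24-byte magic, its SETTINGS frame, and an optional WINDOW_UPDATE on stream 0. The helper names and the chosen values (100 concurrent streams, a 1MB initial stream window, and an increment that grows the connection window from 65,535 bytes to 1MB) are illustrative assumptions, not what any particular client sends.

```python
import struct
from typing import Dict, Optional

# Illustrative sketch; frame() and client_preface() are hypothetical names.
CLIENT_MAGIC = b"PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"  # the fixed 24-byte magic
SETTINGS, WINDOW_UPDATE = 0x4, 0x8


def frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """9-byte header (24-bit length, type, flags, 31-bit stream ID) + payload."""
    header = struct.pack(">I", len(payload))[1:] + struct.pack(">BBI", frame_type, flags, stream_id)
    return header + payload


def client_preface(settings: Dict[int, int], connection_increment: Optional[int] = None) -> bytes:
    """Magic, then SETTINGS on stream 0, then optionally a connection-level WINDOW_UPDATE."""
    settings_payload = b"".join(struct.pack(">HI", ident, value)
                                for ident, value in settings.items())
    out = CLIENT_MAGIC + frame(SETTINGS, 0x0, 0, settings_payload)
    if connection_increment is not None:
        # The top bit of the increment field is reserved, so only 31 bits are used.
        out += frame(WINDOW_UPDATE, 0x0, 0, struct.pack(">I", connection_increment & 0x7FFFFFFF))
    return out


# 0x3 = SETTINGS_MAX_CONCURRENT_STREAMS, 0x4 = SETTINGS_INITIAL_WINDOW_SIZE
preface = client_preface({0x3: 100, 0x4: 1_048_576}, connection_increment=983_041)
```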